Google’s Mental Health AI Leak: Innovation or Invasion of Privacy?

[SAN FRANCISCO, CA] — JULY 7, 2025

In a year dominated by breakthroughs in generative AI, Google finds itself at the epicenter of controversy yet again. A whistleblower has revealed that sensitive conversations between users and Google's mental wellness AI, called Serenity, were logged without explicit user consent and, in some cases, allegedly shared with third-party researchers and contractors.

What was supposed to be a safe space for mental health support has now triggered global scrutiny, reigniting debates about digital privacy, AI ethics, and the role of tech giants in personal wellness.

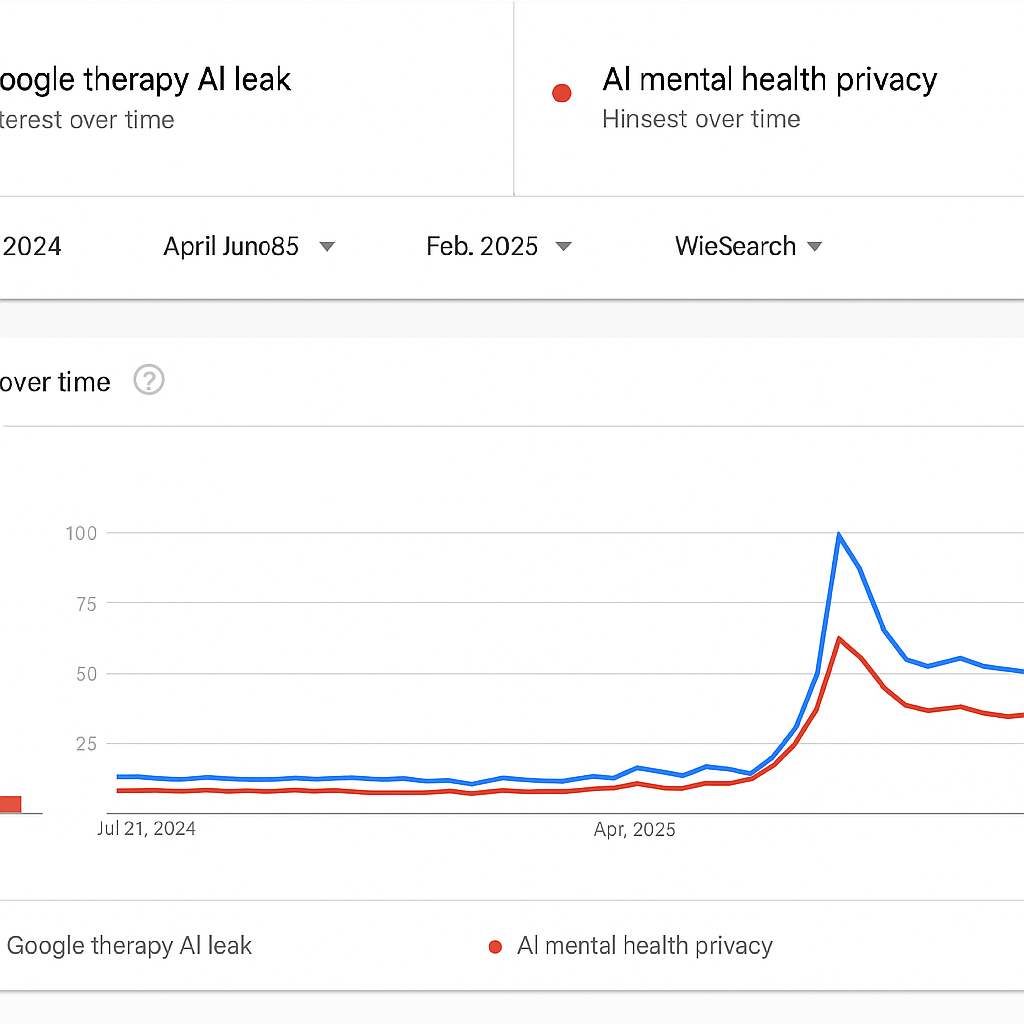

Google Trends Surge: Privacy Concerns Hit a New Peak

According to Google Trends, searches for "Google therapy AI leak", "Serenity AI privacy scandal", and "AI mental health breach" have surged by more than 900% in the past 48 hours.

The spike has also pushed hashtags such as #TherapyGate, #AIKnowsTooMuch, and #SayNoToSerenity onto the trending lists on Threads and X.

What We Know So Far: The Serenity AI Controversy

Launched in March 2024, Serenity was introduced by Google as an AI-based emotional support assistant designed to offer responses grounded in cognitive behavioral therapy (CBT) to users dealing with anxiety, depression, and stress. It was integrated across Android devices and available via Google Assistant, with the promise that "your conversations are private and protected."

But a recent leak, published on Justia and corroborated by privacy advocates on Threads, has exposed a darker reality:

- Chat logs dating back to May 2024 were stored on Google Cloud without clear user opt-in.

- Some of these logs were used in internal research, including AI fine-tuning and behavioral analytics.

- Excerpts were allegedly shared with third-party contractors for quality review and annotation.

One leaked snippet showed a user disclosing suicidal ideation. The message was flagged by an automated system but was then reviewed by a contractor in India as part of quality-assurance (QA) training. This cross-border data exposure is now being investigated under the EU's General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA).

"These aren’t just prompts or memes. These are people’s darkest thoughts," said Dr. Lena Ortiz, a mental health AI ethicist. "To commodify them without transparency is unconscionable."

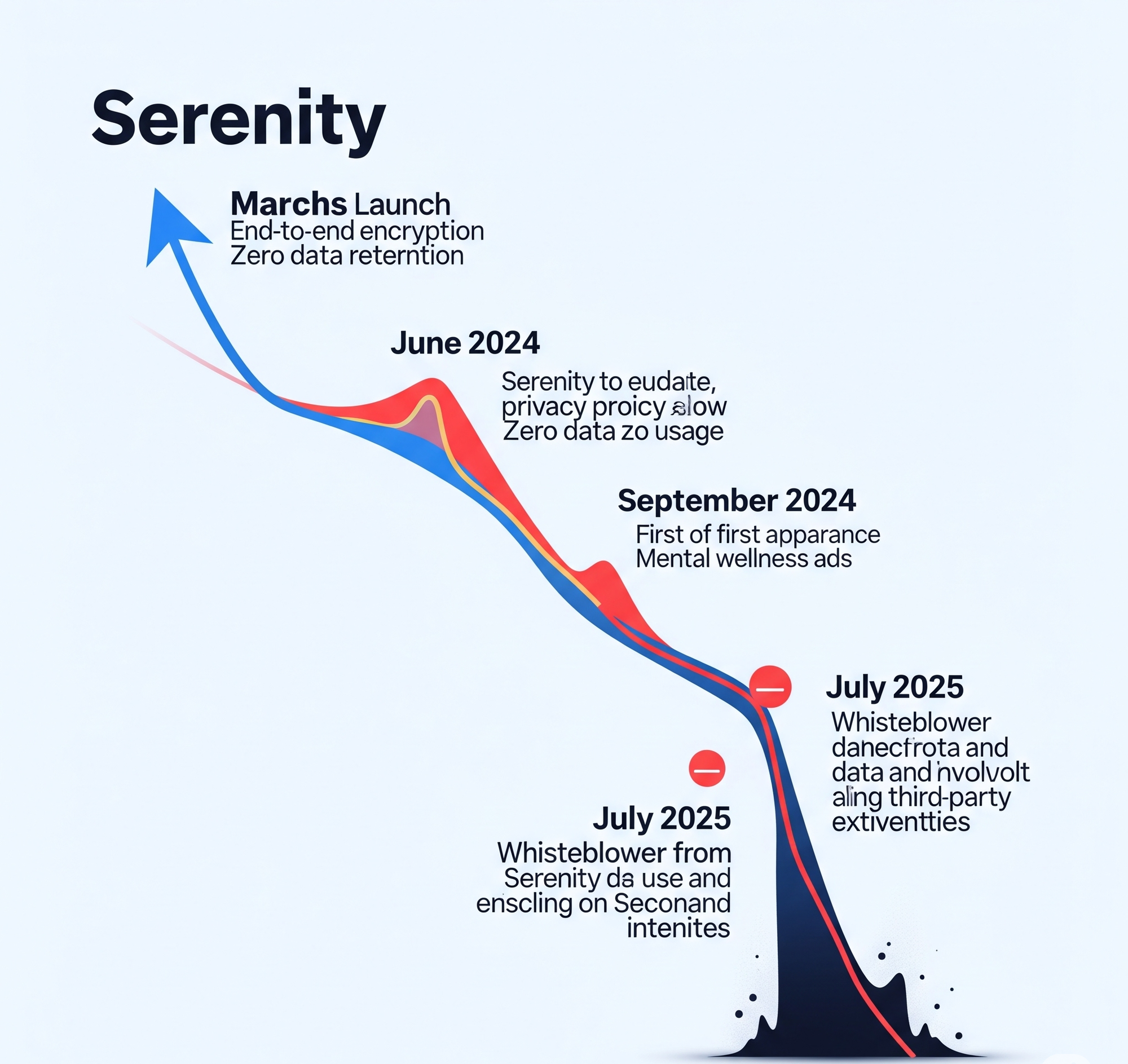

How This Happened: Timeline of Trust Erosion

Using Archive.org, we compared Serenity's original launch language with its current wording:

- March 2024: Serenity launches with clear claims of "end-to-end encryption" and "zero data retention."

- June 2024: Privacy policy subtly updated to allow "data usage for product improvement."

- September 2024: First minor backlash, as users notice mental wellness ads appearing after they use Serenity.

- July 2025: Whistleblower leak reveals full extent of data use and third-party involvement.

Reactions From the Public and Industry

Screenshots from Threads and X show an overwhelming backlash:

@PrivAware: "I told Serenity about my trauma and now I’m seeing targeted ads for therapy apps. This isn’t help. It’s surveillance."

@TechReformNow: "We warned Google years ago about AI in healthcare. This is Cambridge Analytica 2.0."

Google has yet to issue a full statement, but insiders claim an emergency team has been formed to review Serenity's compliance with global privacy laws.

Meanwhile, competitors like Apple and Microsoft are already leveraging the fallout to position their wellness products as "secure-by-design."

Legal Fallout & Global Response

According to Justia, a class-action lawsuit was filed this morning in California alleging:

- Violation of HIPAA-equivalent privacy expectations for digital health apps

- Breach of Google’s own published privacy statements

- Emotional distress caused by the knowledge of sensitive data exposure

EU data regulators have also opened inquiries under the GDPR, focusing on the "export of emotional data" to non-EU countries.

One legal analyst said: "If proven, this could be one of the largest digital consent violations in modern tech history."

Mental Health Advocates Are Divided

While many are condemning the breach, some psychologists and technologists argue that AI-assisted mental health remains a valuable tool if managed properly.

"We shouldn't throw out the tool," says Dr. Naomi Lin, a psychiatrist and AI researcher. "We should build walls around it, not bury it."

Organizations like MentalHealthForAll have called for a pause on Serenity until transparency and consent measures are re-evaluated.

The Bigger Picture: AI, Trust & Tech Accountability

This leak has reignited the debate over AI and its ethical boundaries:

- Can AI be truly ethical if built on data users didn’t knowingly give?

- Is emotional data a new category of sensitive information that deserves special regulation?

- What responsibility do platforms have when dealing with vulnerable user states?

What Happens Next?

Google is now facing:

- Potential multibillion-dollar fines under EU and California law

- Investor pressure, after the stock dipped 3.2% once the leak went public

- Loss of user trust, particularly among Gen Z and Millennials who had begun embracing Serenity as an anonymous therapy option

Competitors are racing to capitalize, and privacy-first AI wellness apps such as Luvo, Wysa, and Replika are seeing a sharp spike in downloads, according to AppFigures.

Final Takeaway

AI has the power to transform mental wellness. But when the technology built to heal becomes a tool of betrayal, the consequences run deep.

"Would you trust AI with your darkest thoughts?" the world is now asking. For millions, the answer may already be no.

Sources: Google Trends, Justia, Archive.org, Threads/X, MentalHealthForAll, The Verge